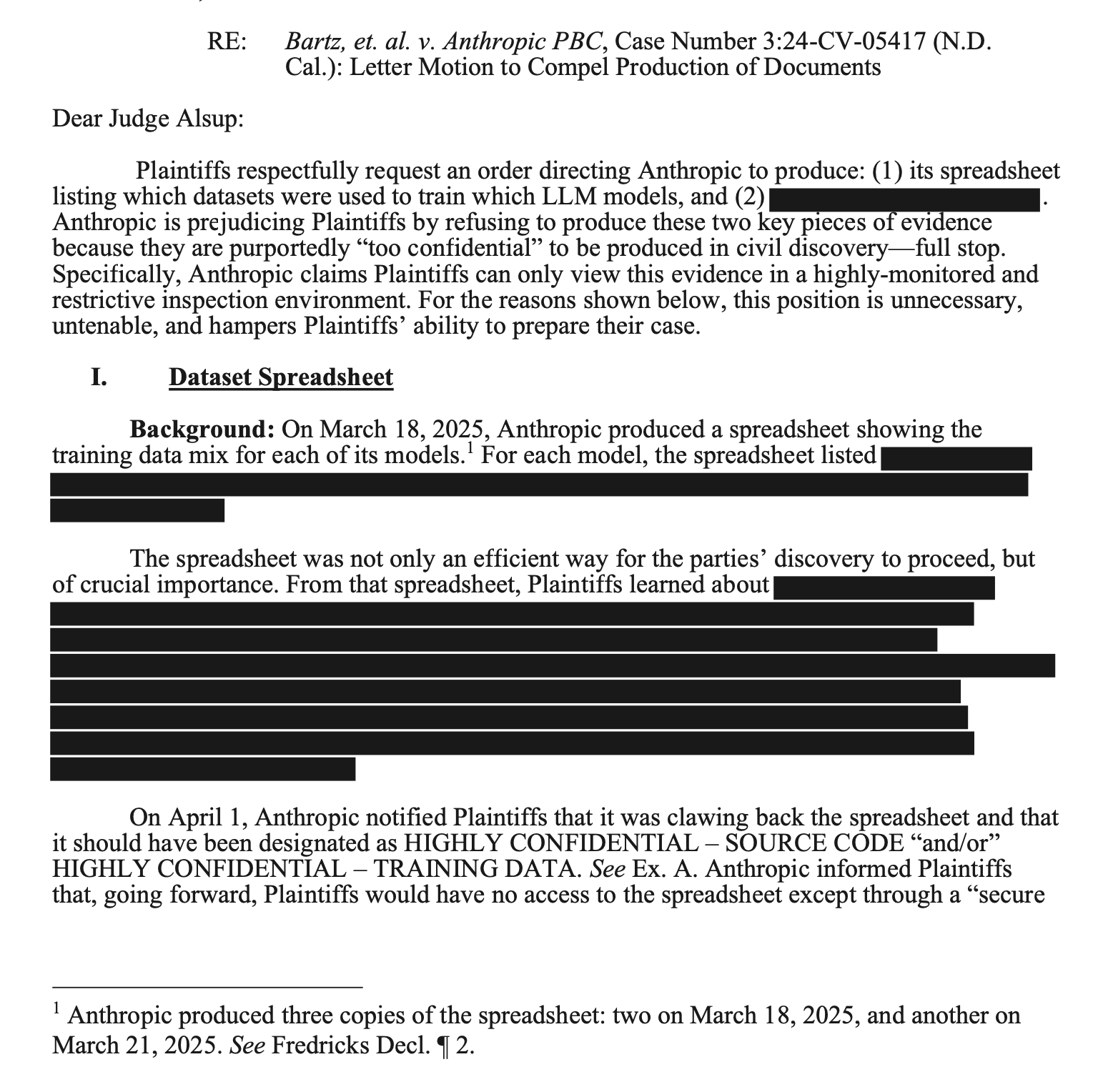

Anthropic has revealed it spent tens of millions of dollars to create a proprietary scanned books dataset used to train its Claude AI system. During legal proceedings in the Bartz v. Anthropic case on 9th June 2025, it emerged that Anthropic compiled a proprietary composition of books that Anthropic sourced, scanned, and created itself—a collection that the company claims is available to no other AI company in the world.

The case centres around Judge William Alsup's 23rd June 2025 ruling, which partially favoured Anthropic on fair use questions but drew a sharp distinction between using books for AI training and how those books were obtained and stored. Judge Alsup deemed the use of books for LLM training "spectacularly transformative" whilst rejecting Anthropic's defence of its acquisition and long-term storage of over seven million pirated books. The court differentiated between books lawfully purchased and then digitised—which it considered fair use—and books downloaded from pirate sites, which it ruled clearly infringing.

The Bartz v. Anthropic case may set a precedent in AI copyright law, providing a framework for how judges, regulators and companies approach copyright compliance in this rapidly developing area. While Judge Alsup's ruling is not binding outside the Northern District of California, it could influence other similar cases, such as those consolidated against OpenAI in the Southern District of New York. The decision makes clear that how copyrighted works are acquired and handled internally is just as important as how they are ultimately used.

Sources:

1.

2.

3.