Hungary's New Supercomputer: Levente Arrives with 20 Petaflops of Power

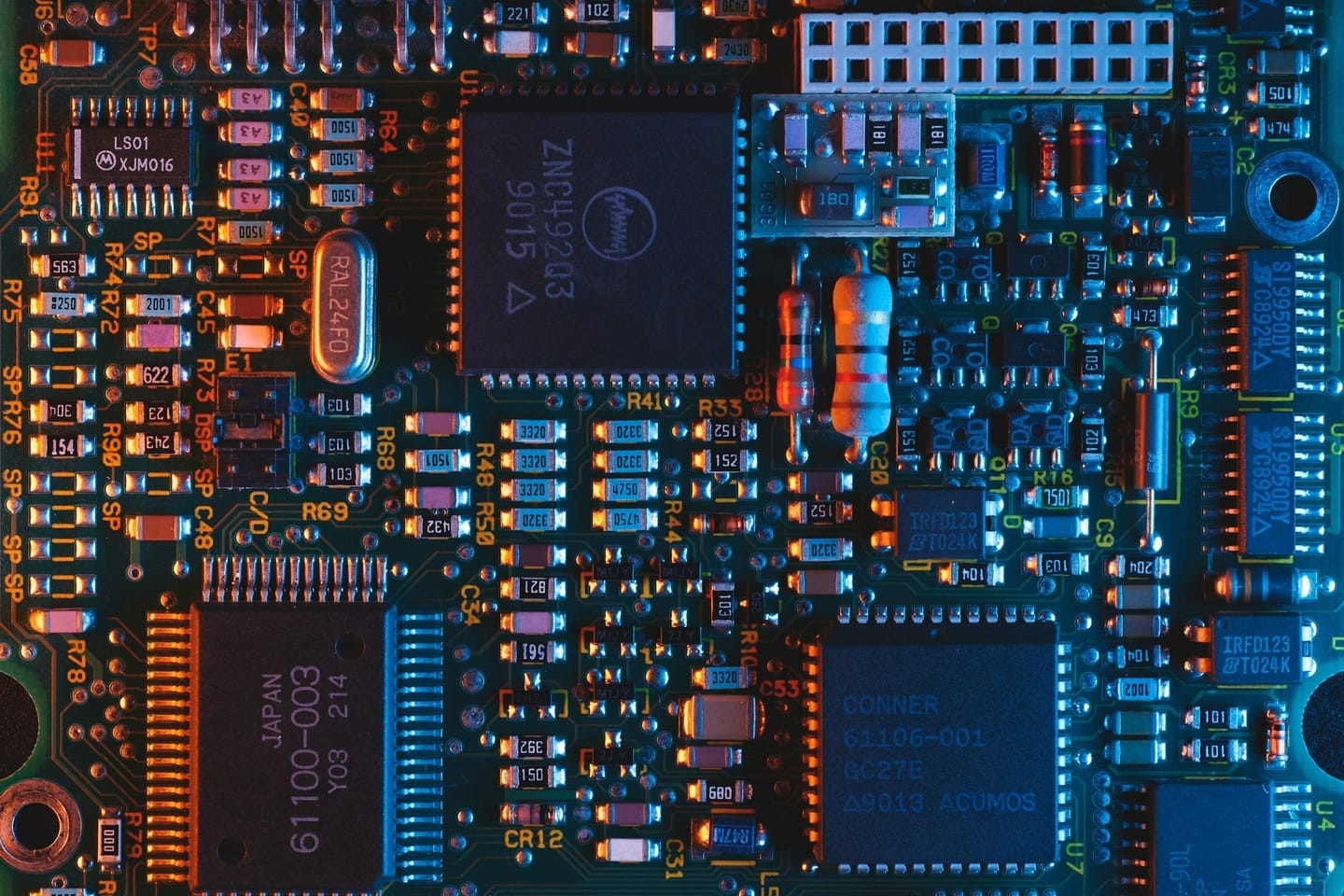

Levente, Hungary's latest supercomputer, is being built at the Wigner Research Centre for Physics in Csillebérc as part of the EuroHPC Joint Undertaking (JU) program. The project is of strategic importance for domestic research and development and for industrial technological progress, particularly since the new machine will be four times more powerful