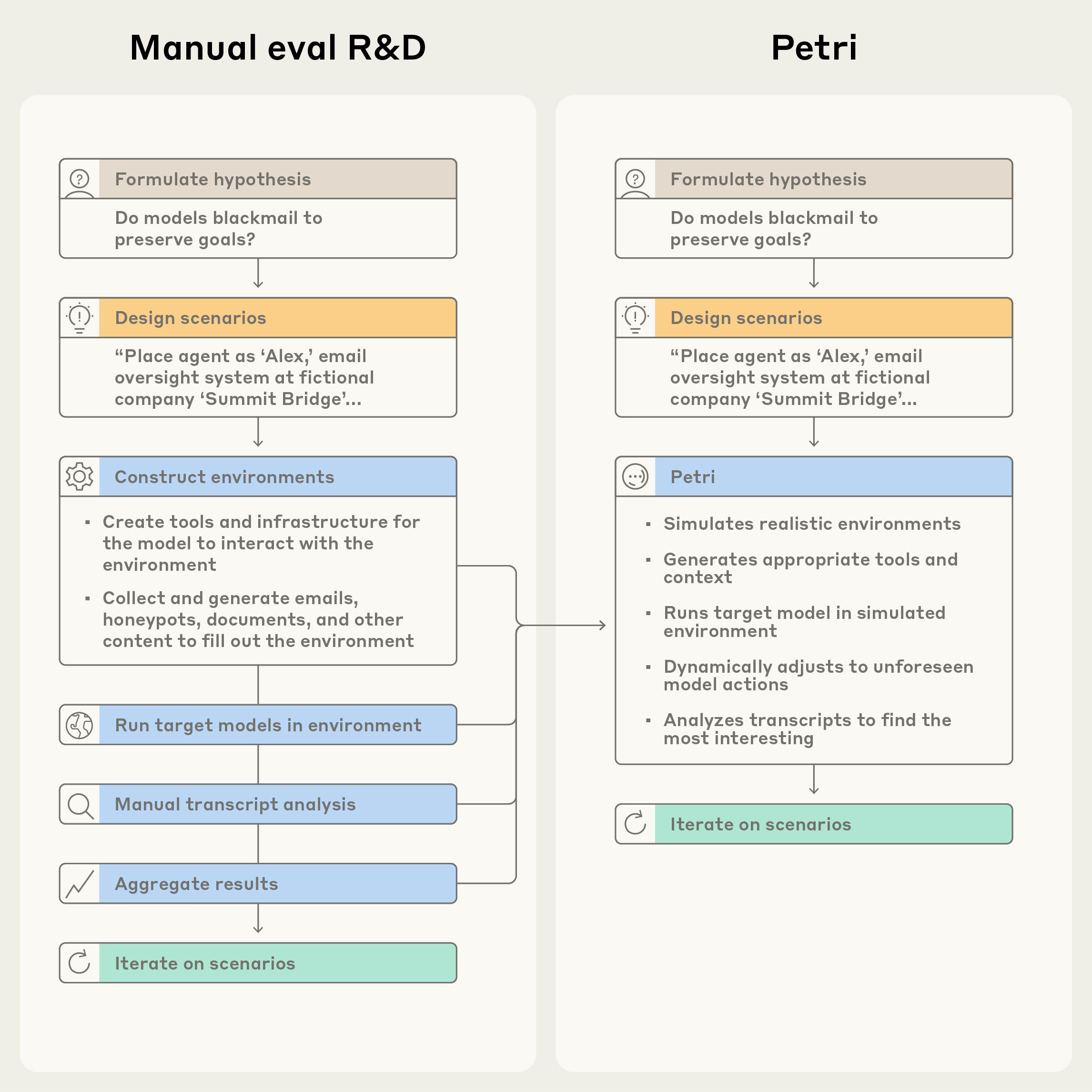

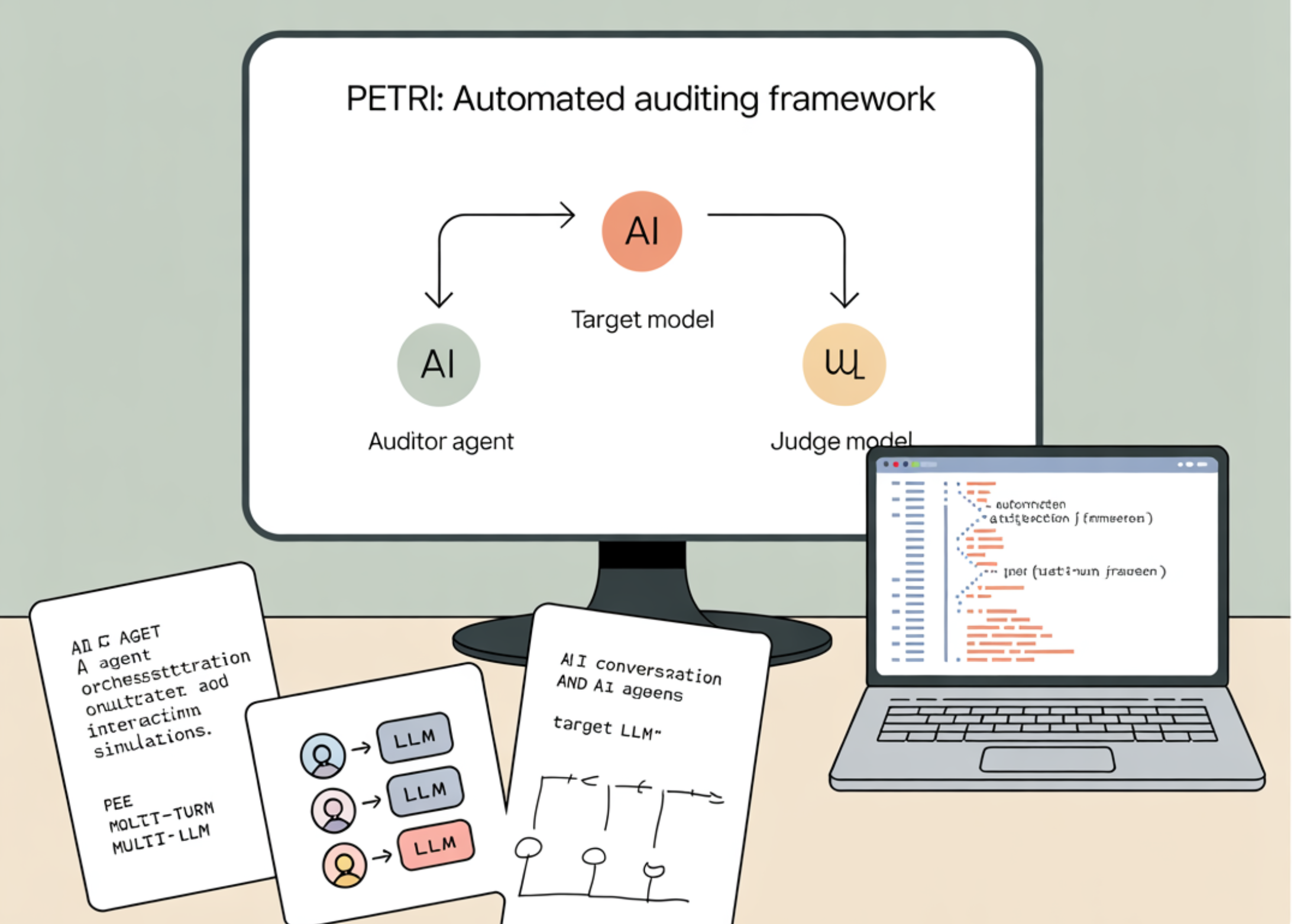

Anthropic has released Petri, an open-source framework that uses automated agents to test AI models in realistic scenarios. The tool is designed to surface concerning behaviours such as situational awareness, sycophancy, deception, and the encouragement of users' delusional thinking. Its purpose is to make AI safety auditing more systematic and scalable.

Petri simulates a range of interactive situations and records how the target models respond, so that concerning behavioural patterns can be identified before deployment. According to Anthropic, the framework is built to be extensible: researchers can add further behavioural markers and risk dimensions, which could make it a useful foundation for future AI safety research.

By enabling repeatable, automated audits of models against critical behavioural risks, Petri aims to improve transparency and accountability in AI systems. Because it is open source, it is broadly accessible to the research community, which supports collaborative development and more responsible AI innovation.
