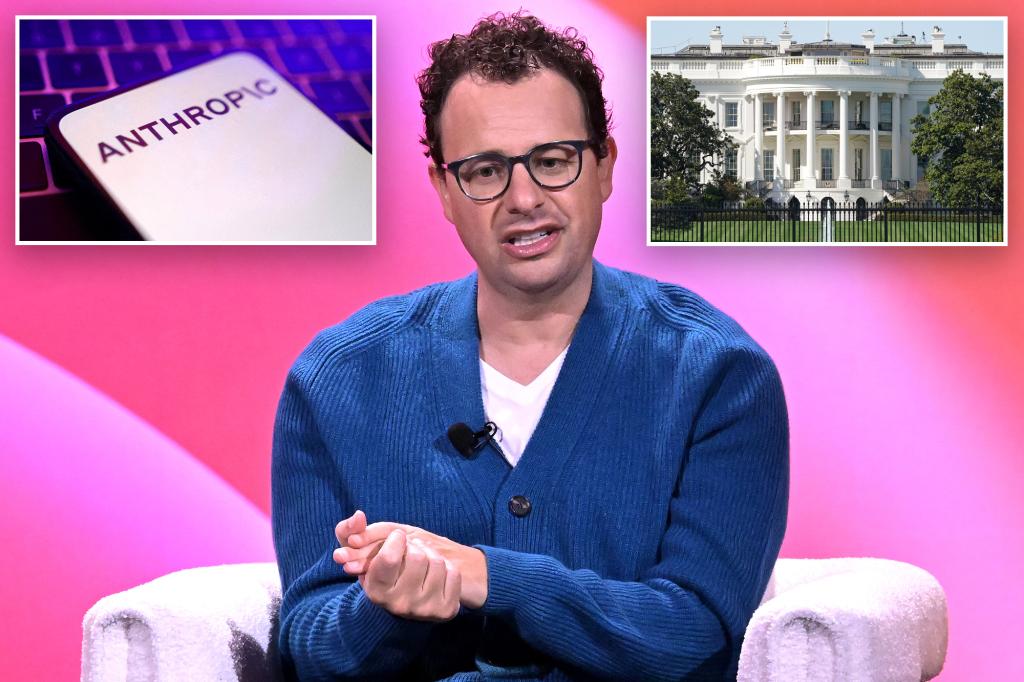

In September 2025, Anthropic formally announced that its AI models cannot be used for law enforcement or surveillance purposes in the United States, directly sparking conflict with the Trump administration. The decision is striking because the government increasingly relies on AI in national security and policing, while Anthropic – one of OpenAI’s main rivals – has rejected such applications on ethical grounds.

The company’s “Constitutional AI” framework is built around democratic values and human rights, and its backers include the left-leaning Ford Foundation, known for promoting strong social responsibility in technology. A 17 September report by Semafor revealed that the White House expressed concern over Anthropic’s self-imposed rules excluding law enforcement uses, even as other companies – notably Palantir – actively market AI solutions to government agencies. Gizmodo’s analysis emphasised that this stance aligns with Anthropic’s long-term strategy of prioritising ethical AI development over immediate commercial profit.

The September developments illustrate how AI regulation and ethical boundaries are becoming central political issues in the United States. Anthropic’s refusal to allow surveillance-related uses has escalated tensions with the administration and may also set a benchmark for the wider industry. In the long run, it could shape the conditions under which AI models are integrated into public safety practices and how far democratic norms are preserved as the technology is deployed.
