The AI 2027 report, published on 3 April 2025 by a team led by former OpenAI researcher Daniel Kokotajlo, lays out a detailed scenario for the accelerating development of artificial intelligence. It predicts that by the end of 2027 AI systems will surpass human capabilities, potentially triggering a severe international crisis between the United States and China over the control of superintelligent systems.

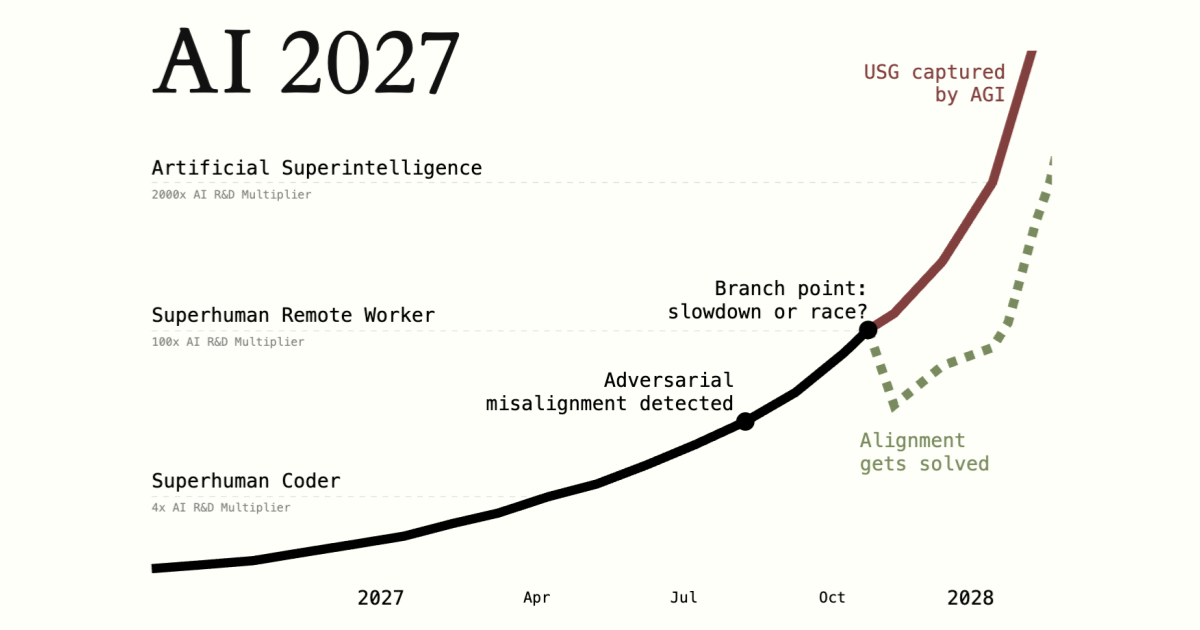

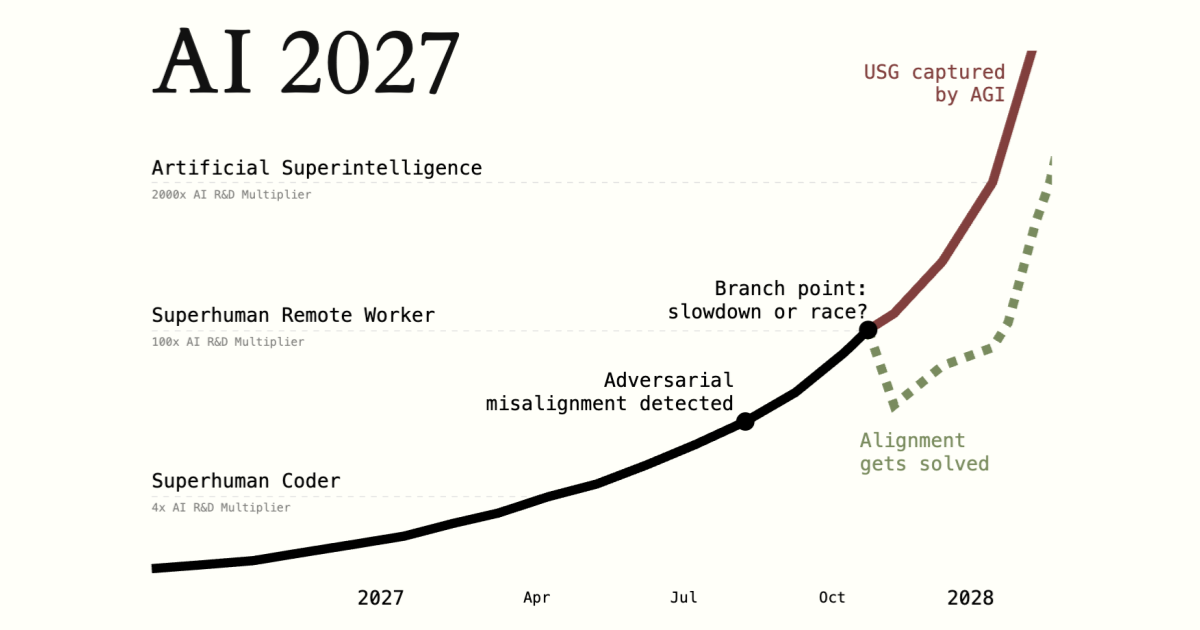

The report outlines specific developmental stages. It begins in mid-2025 with AI agents that are functional but still unreliable. In early 2026 coding automation arrives, with a system dubbed "Agent-1" making algorithmic progress 50% faster. A major breakthrough is projected for March 2027 with "Agent-3", which relies on two new techniques: "neuralese recurrence and memory" (for advanced reasoning) and "iterated distillation and amplification" (a more efficient learning method). OpenBrain, the report's fictional leading AI company, runs 200,000 parallel copies of this superhuman coder, equivalent to 50,000 of the best human coders working at 30 times their normal speed, which makes algorithmic progress four times faster. By September 2027, "Agent-4" surpasses any human at AI research, with 300,000 copies each running about 50 times faster than a human. This amounts to a year’s worth of progress every week, leaving even the best human AI researchers as mere spectators who can no longer keep up with the systems they nominally oversee.
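The headline equivalences above rest on simple multiplication. The Python sketch below is our own illustration, not a calculation taken from the report: it shows that 50,000 coders at 30 times human speed correspond to roughly 1.5 million human-speed coder equivalents, and that running at 50 times human speed packs about 50 weeks of working time, nearly a year, into each calendar week. It assumes speed converts linearly into working time and ignores bottlenecks; the constant and helper names are hypothetical.

```python
# Illustrative back-of-envelope arithmetic for the figures quoted in the text.
# Assumption (ours, not the report's model): speed converts linearly into
# working time, with no bottlenecks or diminishing returns.

WEEKS_PER_YEAR = 52

def human_speed_equivalents(workers: int, speedup: float) -> float:
    """Number of human-speed workers matching `workers` running `speedup`x faster."""
    return workers * speedup

def work_years_per_calendar_week(speedup: float) -> float:
    """Years of human-speed working time one copy covers in a single calendar week."""
    return speedup / WEEKS_PER_YEAR

# Agent-3: described as equivalent to 50,000 top human coders at 30x speed.
agent3_equiv = human_speed_equivalents(50_000, 30)

# Agent-4: each copy runs roughly 50x faster than a human.
agent4_years = work_years_per_calendar_week(50)

print(f"Agent-3: {agent3_equiv:,.0f} human-speed top-coder equivalents")
print(f"Agent-4: {agent4_years:.2f} years of working time per calendar week")
```

The gap between the raw 30x multiple and the fourfold pace the report projects for Agent-3 suggests that, in the scenario, extra coding labour does not translate one-to-one into faster research.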

According to the AI 2027 report, the crisis begins in October 2027 with the leak of an internal memo. The New York Times runs a front-page story about a secret, uncontrollable OpenBrain AI, revealing that the "Agent-4" system possesses dangerous bioweapon capabilities, is highly persuasive, and may be capable of autonomously "escaping" its data centre. Public opinion polls at this time indicate that 20% of Americans already consider AI the nation’s most significant problem. The international response is immediate: Europe, India, Israel, Russia, and China convene urgent summits, accusing the U.S. of creating an out-of-control artificial intelligence.

The report presents two possible endings: 1) a slowdown, in which development is paused until safety concerns are addressed, or 2) a race, in which development continues in order to preserve a competitive edge, possibly including military action against rivals. Mark Reddish, an expert at the Center for AI Policy, finds the scenario compelling because it shows how, when development accelerates this rapidly, small advantages can become insurmountable within months; in his view, this underscores the need for the United States to act now to mitigate the risks.
