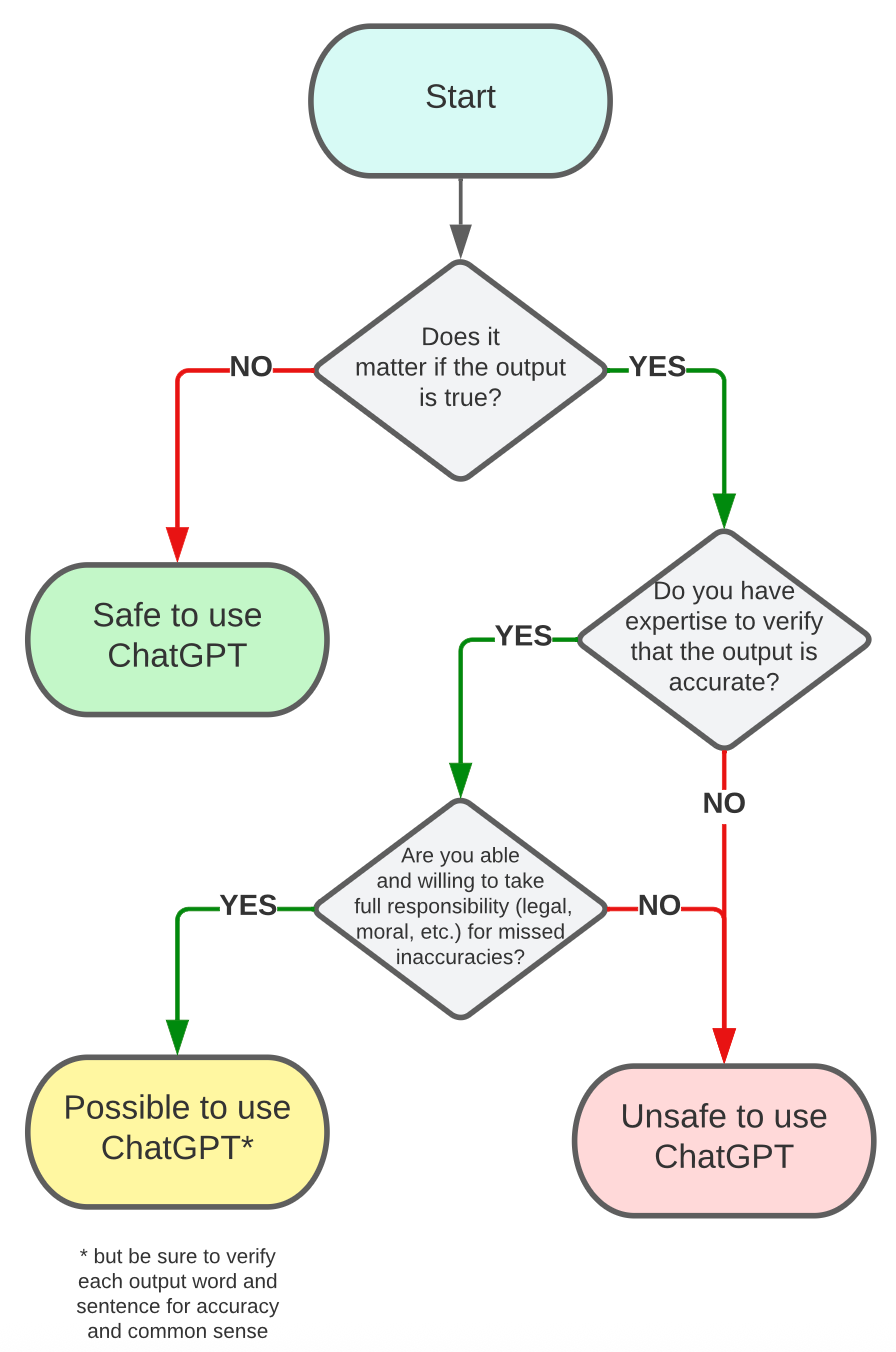

Generative artificial intelligence tools are increasingly being integrated into scientific research practice. While these technologies offer significant productivity benefits, they also raise considerable challenges. Generative models are prone to producing factually inaccurate but convincingly presented content, which poses a serious risk to scientific integrity. It is therefore essential to establish a decision framework, summarised in the figure above, that helps researchers determine when and under what circumstances these tools can be used responsibly.

The starting point of the decision-making process is to clarify how critical the factual accuracy of the generated content is for a given research task. This distinction is strategically important, as one of the most significant limitations of generative models is the "hallucination" phenomenon, whereby systems produce information that is factually incorrect but linguistically and stylistically convincing. In research activities where factual accuracy is not a primary concern—such as creative writing, brainstorming, or stylistic editing—generative tools can be applied safely. In these cases, the models' strengths in linguistic creativity and text formatting can be fully utilised. Conversely, when the factual content of the generated material is critically important, the researcher must proceed to the next stages of the evaluation.

The second decision point concerns the researcher's own professional competence. This extends beyond theoretical knowledge to include the practical experience and methodological expertise required to identify potential deficiencies, evaluate the correctness of an argument, and place the information within the relevant scholarly discourse. If the researcher possesses this necessary background, the controlled application of generative tools becomes possible, as their expertise functions as a filter to screen out inaccurate information. If, however, the researcher lacks sufficient expertise in the given area, the use of generative tools presents a significant risk. Content generated by models often appears professionally grounded, even when it contains fundamental errors that are difficult for non-expert users to identify, which can have serious consequences for research quality and credibility.

The third and most critical element of the process is the question of accountability. This transcends mere technical competence and touches upon the complex system of research ethics, professional integrity, and social responsibility. A researcher must be willing and able to take full responsibility for the accuracy and consequences of the generated content, including compliance with publication standards and accepting risks to their scholarly reputation. If a researcher accepts this responsibility, generative tools may be conditionally applied, but this requires an extremely rigorous validation protocol where every statement and conclusion is verified. If the researcher is unwilling or unable to assume this level of accountability, the application of these tools is not recommended.
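The three decision points described above can also be read as a short sequence of checks. The following Python sketch is only an illustration of that reading, not a definitive implementation of the framework: the function name `assess_use`, its parameter names, and the `Outcome` labels are hypothetical and do not come from the figure. It assumes that each question can be answered with a simple yes or no, which real research contexts will rarely allow so cleanly.

```python
from enum import Enum


class Outcome(Enum):
    """Hypothetical labels for the four outcomes described in the text."""
    SAFE_TO_USE = "safe to use"
    SIGNIFICANT_RISK = "significant risk"
    NOT_RECOMMENDED = "not recommended"
    CONDITIONAL_USE = "conditional use: verify every statement and conclusion"


def assess_use(accuracy_critical: bool,
               has_expertise: bool,
               accepts_accountability: bool) -> Outcome:
    """Walk through the three decision points in order (illustrative only)."""
    # 1. Is factual accuracy critical for this task?
    if not accuracy_critical:
        return Outcome.SAFE_TO_USE
    # 2. Can the researcher verify the output with their own expertise?
    if not has_expertise:
        return Outcome.SIGNIFICANT_RISK
    # 3. Is the researcher willing and able to take full responsibility?
    if not accepts_accountability:
        return Outcome.NOT_RECOMMENDED
    # All three conditions hold: use is possible, subject to rigorous validation.
    return Outcome.CONDITIONAL_USE
```

For example, under these assumptions `assess_use(False, False, False)` yields `Outcome.SAFE_TO_USE`, which would correspond to a task such as brainstorming, while `assess_use(True, True, True)` yields `Outcome.CONDITIONAL_USE`. The ordering of the checks matters: the later questions are only reached when factual accuracy has already been judged critical.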

In sum, this three-tiered decision model provides practical guidance for the ethical and effective integration of generative AI into research. It represents neither a technophobic rejection of these tools nor a techno-utopian, uncritical acceptance. Instead, it offers a balanced, structured approach to responsible use. When applying the framework, it is important to emphasise that each research context requires unique considerations. Due to the rapid development of generative AI, this decision framework also requires continuous review and adaptation to keep pace with technological innovations and changes in the ethical and regulatory environment.