Generative AI relies on a specialised branch of machine learning (ML), namely deep learning (DL), whose algorithms employ neural networks to detect and exploit patterns embedded in data. By processing vast volumes of information, these algorithms can synthesise existing knowledge and recombine it in new ways. As a result, generative AI can be used to perform various natural language processing (NLP) tasks (Yenduri et al. 2024, 54609), such as emotion detection, text summarisation, semantic comparison across multiple sources, and the generation of new texts.

There is no universally accepted definition of generative AI; the term is often used as an umbrella concept. While technically any model that produces an output could be considered generative, the AI research community typically reserves the term for sophisticated models capable of generating high-quality content that resembles human-created outputs (García-Peñalvo & Vázquez-Ingelmo 2023, 7). Generative AI refers to a collection of AI techniques and models developed to learn the hidden, underlying structure of a dataset and to generate new data points that plausibly align with the original data (Pinaya et al. 2023, 2). It is primarily grounded in generative modelling, that is, the estimation of the joint distribution of inputs and outputs (rather than only the conditional distribution of outputs given inputs, as in discriminative modelling), with the aim of inferring a plausible representation of the actual data distribution.
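The following Python sketch makes the idea of estimating a joint distribution concrete. It fits a toy generative model to labelled one-dimensional data and then samples new (input, output) pairs from it. It factorises the joint distribution as p(x, y) = p(y) · p(x | y) and assumes, purely for illustration, a single Gaussian per label. The function names `fit_joint` and `sample` and the toy data are hypothetical. The deep generative models discussed in this section learn far richer distributions with neural networks. In principle, however, the two steps shown here are the same: first estimate the distribution, then draw new points from it.

```python
import random
import statistics
from collections import defaultdict


def fit_joint(pairs):
    """Estimate p(x, y) = p(y) * p(x | y) from (x, y) pairs.

    Illustrative assumption (not how deep generative models work):
    x is a scalar and p(x | y) is one Gaussian per label y, fitted
    by maximum likelihood.
    """
    by_label = defaultdict(list)
    for x, y in pairs:
        by_label[y].append(x)
    n = len(pairs)
    model = {}
    for y, xs in by_label.items():
        prior = len(xs) / n            # p(y): relative frequency of label y
        mu = statistics.fmean(xs)      # ML estimate of the mean of p(x | y)
        sigma = statistics.pstdev(xs)  # ML (population) std dev of p(x | y)
        model[y] = (prior, mu, sigma)
    return model


def sample(model, k, rng=None):
    """Draw k new (x, y) pairs from the fitted joint distribution."""
    rng = rng or random.Random()
    labels = list(model)
    priors = [model[y][0] for y in labels]
    new_pairs = []
    for _ in range(k):
        y = rng.choices(labels, weights=priors)[0]  # first sample y ~ p(y)
        _, mu, sigma = model[y]
        x = rng.gauss(mu, sigma)                    # then sample x ~ p(x | y)
        new_pairs.append((x, y))
    return new_pairs


# Hypothetical toy training data: scalar inputs with a discrete label.
data = [(1.0, "short"), (1.2, "short"), (0.8, "short"),
        (5.0, "long"), (5.5, "long"), (4.5, "long")]
model = fit_joint(data)
new_points = sample(model, 5, random.Random(0))
print(model)
print(new_points)
```

Each sampled pair is new, but it is drawn from the distribution estimated from the training data. This is the simple sense in which generated data points "plausibly align with the original data".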

A generative artificial intelligence system encompasses the entire infrastructure, including the model itself, data processing pipelines, and user interface components. The model is the central element of the system, enabling both interaction and application (Feuerriegel et al. 2024, 112). The concept of generative AI thus extends beyond the purely technical foundations of generative models to include additional, functionally relevant characteristics of specific AI systems (Feuerriegel et al. 2024). In the current literature, the term generative AI is generally used in a broad sense to refer to the creation of tangible synthetic content through AI-based tools (García-Peñalvo & Vázquez-Ingelmo 2023, 14). In a narrower sense, however, the AI research community tends to describe generative applications in terms of their underlying models and does not necessarily classify such work as generative AI (Ronge, Maier, & Rathgeber 2024, 1).

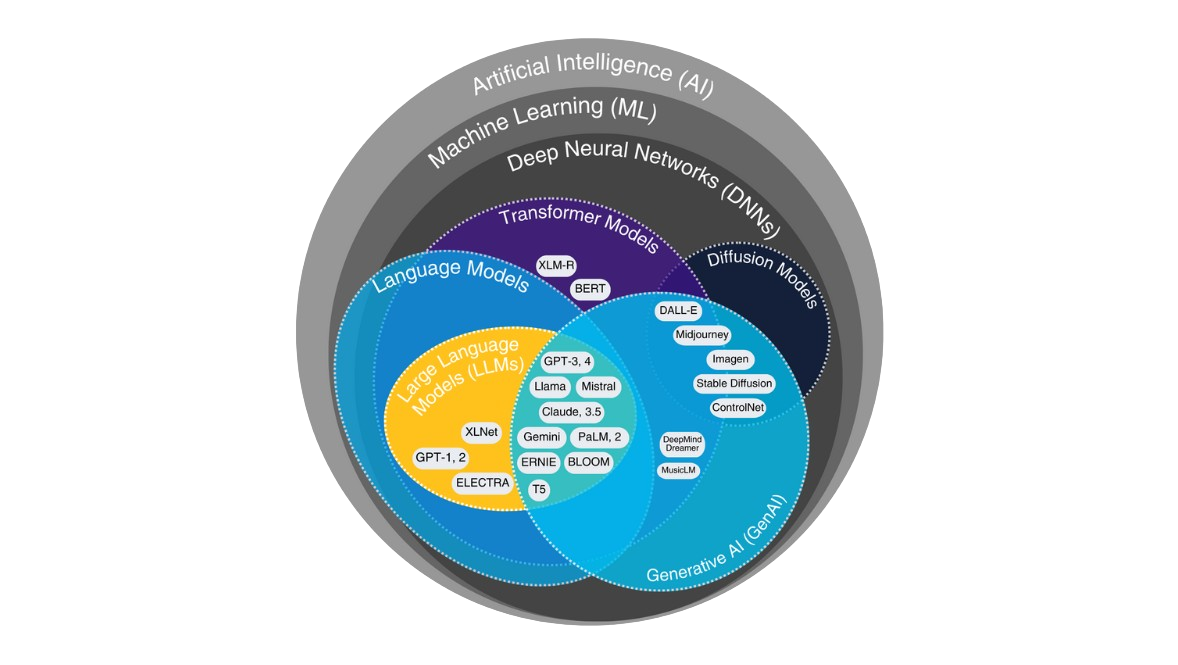

In sum, generative AI is a specialised branch of artificial intelligence that employs advanced deep learning models based on neural networks. As illustrated in Figure 2, this field encompasses several key architectural families, most notably Transformer Models and Diffusion Models. Within the Transformer architecture, Large Language Models (LLMs) represent a powerful class of models pre-trained on vast corpora of linguistic data. This enables generative AI to produce novel, previously unseen synthetic content in various formats and to support a wide range of tasks through generative modelling.

Fig. 2. From Artificial Intelligence to Generative AI

References:

1. Feuerriegel, Stefan, Jochen Hartmann, Christian Janiesch, and Patrick Zschech. 2024. ‘Generative AI’. Business & Information Systems Engineering 66 (1): 111–26. doi:10.1007/s12599-023-00834-7

2. García-Peñalvo, Francisco, and Andrea Vázquez-Ingelmo. 2023. ‘What Do We Mean by GenAI? A Systematic Mapping of The Evolution, Trends, and Techniques Involved in Generative AI’. International Journal of Interactive Multimedia and Artificial Intelligence 8 (4): 7. doi:10.9781/ijimai.2023.07.006

3. Goodfellow, Ian J., Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. ‘Generative Adversarial Nets’. Advances in Neural Information Processing Systems 27.

4. Pinaya, Walter H. L., Mark S. Graham, Eric Kerfoot, Petru-Daniel Tudosiu, Jessica Dafflon, Virginia Fernandez, Pedro Sanchez, et al. 2023. ‘Generative AI for Medical Imaging: Extending the MONAI Framework’. arXiv. doi:10.48550/ARXIV.2307.15208

5. Ronge, Raphael, Markus Maier, and Benjamin Rathgeber. 2024. ‘Defining Generative Artificial Intelligence: An Attempt to Resolve the Confusion about Diffusion’. (Manuscript).

6. Varga, László, and Yulia Akhulkova. 2023. ‘The Language AI Alphabet: Transformers, LLMs, Generative AI, and ChatGPT’. Nimdzi. https://www.nimdzi.com/the-language-ai-alphabet-transformers-llms-generative-ai-and-chatgpt/

7. Yenduri, Gokul, M. Ramalingam, G. Chemmalar Selvi, Y. Supriya, Gautam Srivastava, Praveen Kumar Reddy Maddikunta, G. Deepti Raj, et al. 2024. ‘GPT (Generative Pre-Trained Transformer)— A Comprehensive Review on Enabling Technologies, Potential Applications, Emerging Challenges, and Future Directions’. IEEE Access 12: 54608–49. doi:10.1109/ACCESS.2024.3389497