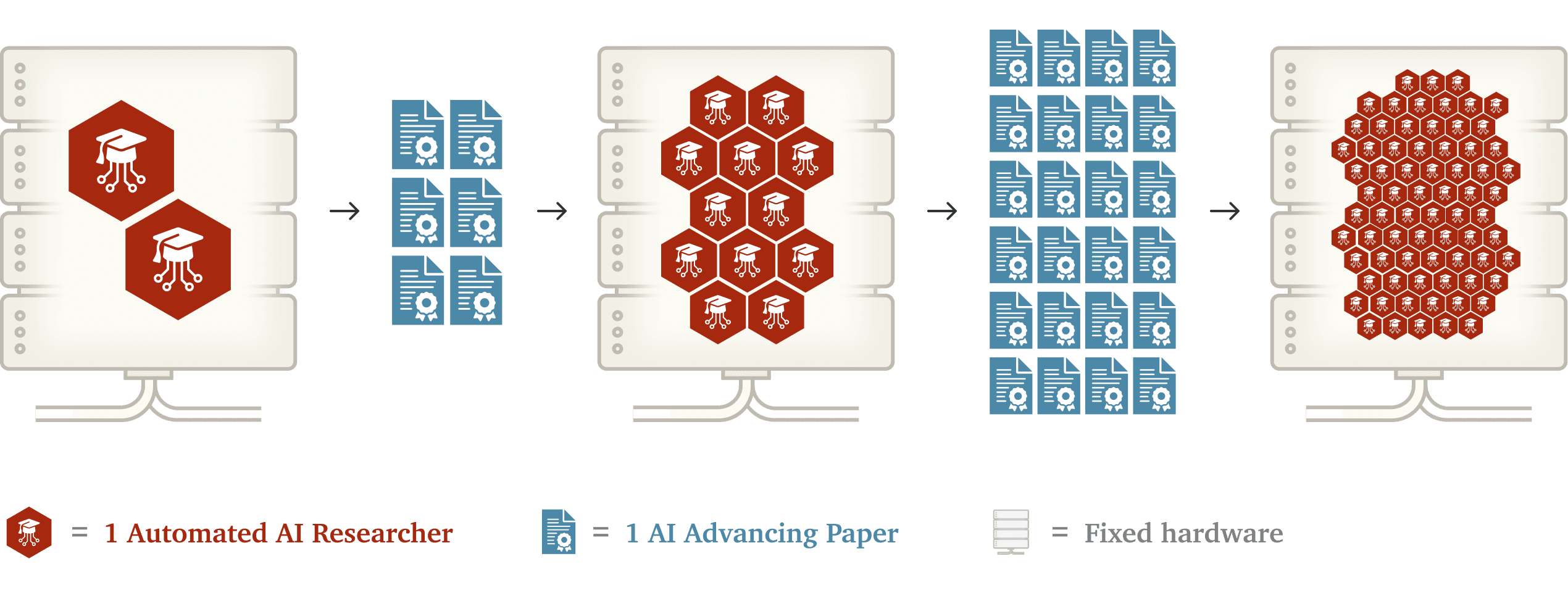

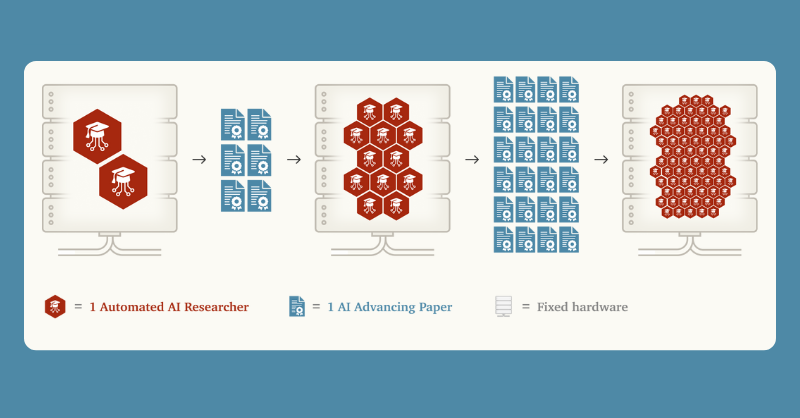

According to a study published by Forethought Research on 26 March 2025, fully automating AI research and development could trigger a software-driven intelligence explosion. The researchers examined what happens once AI systems can fully automate their own development: a feedback loop in which each new generation of AI builds an even more capable successor, potentially within months.

Empirical data indicate that the efficiency of AI software roughly doubles every six months, and this progress is likely outpacing the growth of the research resources behind it. AI is also already taking over part of software development itself: Google has said that AI tools now generate more than a quarter of all new code at the company, and Amazon reports that AI tools have saved roughly 4,500 developer-years of work, an estimated $260 million in annual efficiency gains.

The study uses two key terms: AI Systems for AI R&D Automation (ASARA), meaning AI systems able to fully automate AI research and development, and a Software Intelligence Explosion (SIE), meaning a rapid, self-reinforcing rise in AI capabilities driven by software improvements rather than additional hardware. It identifies two main obstacles that could keep ASARA from triggering an SIE: fixed computational capacity and the long training times required for new AI systems. However, the study argues that these limits are unlikely to rule out an SIE entirely. Algorithmic advances have consistently improved training efficiency in the past, so AI experiments and training runs may gradually become faster, allowing acceleration to continue despite these barriers.
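A toy model helps show why it matters whether software progress outpaces the research effort behind it. This is our own simplification, not the study's model. It assumes that cumulative research input `R` grows at a rate equal to the current software level `S` (because AI is doing the research on fixed compute), that `S = R**r` for a hypothetical "returns to research" parameter `r`, and that time is scaled so the first doubling of `S` takes six months, the rate cited above. The function name `doubling_times` is likewise hypothetical.

```python
import math


def doubling_times(r: float, n: int = 5, first_doubling_months: float = 6.0) -> list[float]:
    """Months taken by each of the first n doublings of software level S.

    Toy model (an illustrative assumption, not the study's model):
      - cumulative research input R grows at rate dR/dt = S (AI does the research),
      - software level S = R**r, where r is a hypothetical returns-to-research parameter,
      - start at R = 1 (so S = 1) at t = 0.
    Integrating dR/dt = R**r gives each doubling's duration in closed form.
    """
    if r <= 0:
        raise ValueError("r must be positive")
    step = 2 ** (1 / r)  # factor by which R must grow for S to double
    raw = []
    R = 1.0
    for _ in range(n):
        R_next = R * step
        if math.isclose(r, 1.0):
            dt = math.log(R_next / R)  # r == 1: plain exponential growth, constant doubling time
        else:
            dt = (R_next ** (1 - r) - R ** (1 - r)) / (1 - r)
        raw.append(dt)
        R = R_next
    # Rescale so the first doubling takes first_doubling_months.
    scale = first_doubling_months / raw[0]
    return [dt * scale for dt in raw]


if __name__ == "__main__":
    print(f"Doubling every 6 months = {2 ** (12 / 6):.0f}x per year")
    for r in (0.5, 1.0, 2.0):
        months = doubling_times(r)
        print(f"r={r}: " + ", ".join(f"{m:.1f}" for m in months))
```

Under these assumptions, `r = 1` keeps every doubling at six months, `r = 0.5` makes each doubling take twice as long as the last (6, 12, 24, ... months), and `r = 2` makes them shrink (6, about 4.2, 3, ... months). Only in the last case does automated research produce the accelerating feedback loop the study describes; fixed compute and long training runs would act as brakes that this sketch deliberately ignores.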

The pace of progress could easily outstrip society's ability to prepare, so the study proposes specific safety measures to manage the risks of a software-driven intelligence explosion. Because an SIE could advance AI capabilities extremely rapidly, appropriate regulations would need to be in place beforehand. Proposed measures include continuous monitoring of AI software development, advance evaluation of how well AI systems can carry out AI development, and a threshold that companies commit not to cross without adequate safety measures, all intended to keep technological progress safe.
