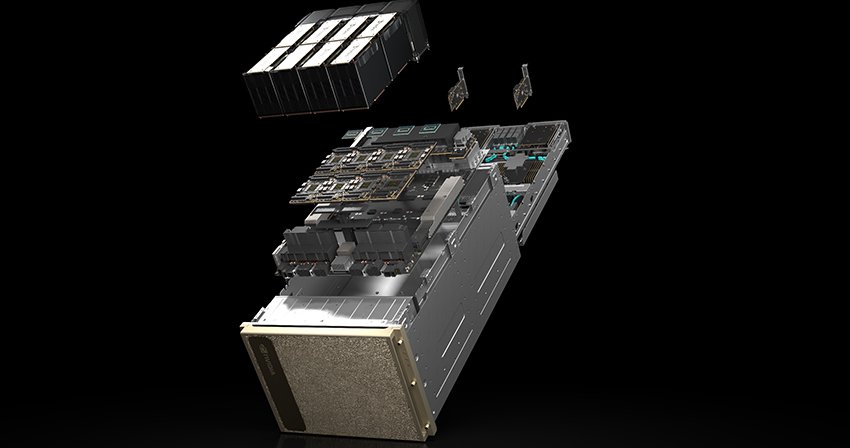

AI infrastructure startup Parasail officially launched its AI Deployment Network platform on 2 April 2025. The platform offers real-time, on-demand access to GPU resources, including Nvidia's H100, H200, A100, and RTX 4090 GPUs, at what the company says are significantly lower costs than those of traditional cloud providers. Parasail's proprietary orchestration engine automatically matches workloads to GPUs across its global network, with the aim of optimising performance and reducing idle costs.

By aggregating resources from GPU providers around the world, Parasail has assembled a fleet that the company claims is larger than Oracle's entire GPU fleet. According to CEO Mike Henry, legacy cloud providers lure customers into long-term contracts for small amounts of computing capacity, whereas Parasail offers true on-demand access without hidden constraints. He says this lets companies deploy AI models and access dozens of GPUs within hours, with minimal setup or configuration. Parasail claims customers can cut costs by a factor of two to five compared with other infrastructure providers, and by a factor of 15 to 30 when migrating from OpenAI or Anthropic.

Early adopters include Elicit Research PBC, which uses its AI assistant to screen more than 100,000 scientific papers daily, and Weights & Biases, which used Parasail's service to deploy the popular DeepSeek R1 reasoning model. Henry believes the future of AI infrastructure lies not in a single cloud provider but in an interconnected network of high-performance compute providers. Although the market is highly competitive, with players such as CoreWeave, GMI Cloud, Together Computer, and Storj Labs, Henry isn't concerned about oversaturation and believes the industry is "just getting started".
