In July 2025, a serious privacy flaw was discovered in the Meta AI application: under the default settings, users' conversations automatically became public, making personal questions and answers visible to anyone. Most users were unprepared for this. The root of the problem was the app's discovery feature, which is enabled by default and which most users did not know existed. When a user asks the Meta AI chatbot a question, the conversation is automatically shared in the public feed unless the user explicitly disables the feature in the application settings. According to India Today, on July 16th a white hat hacker detected the bug and reported it to Meta's security team, receiving a $100,000 reward (approximately 8.5 million rupees) from the company.

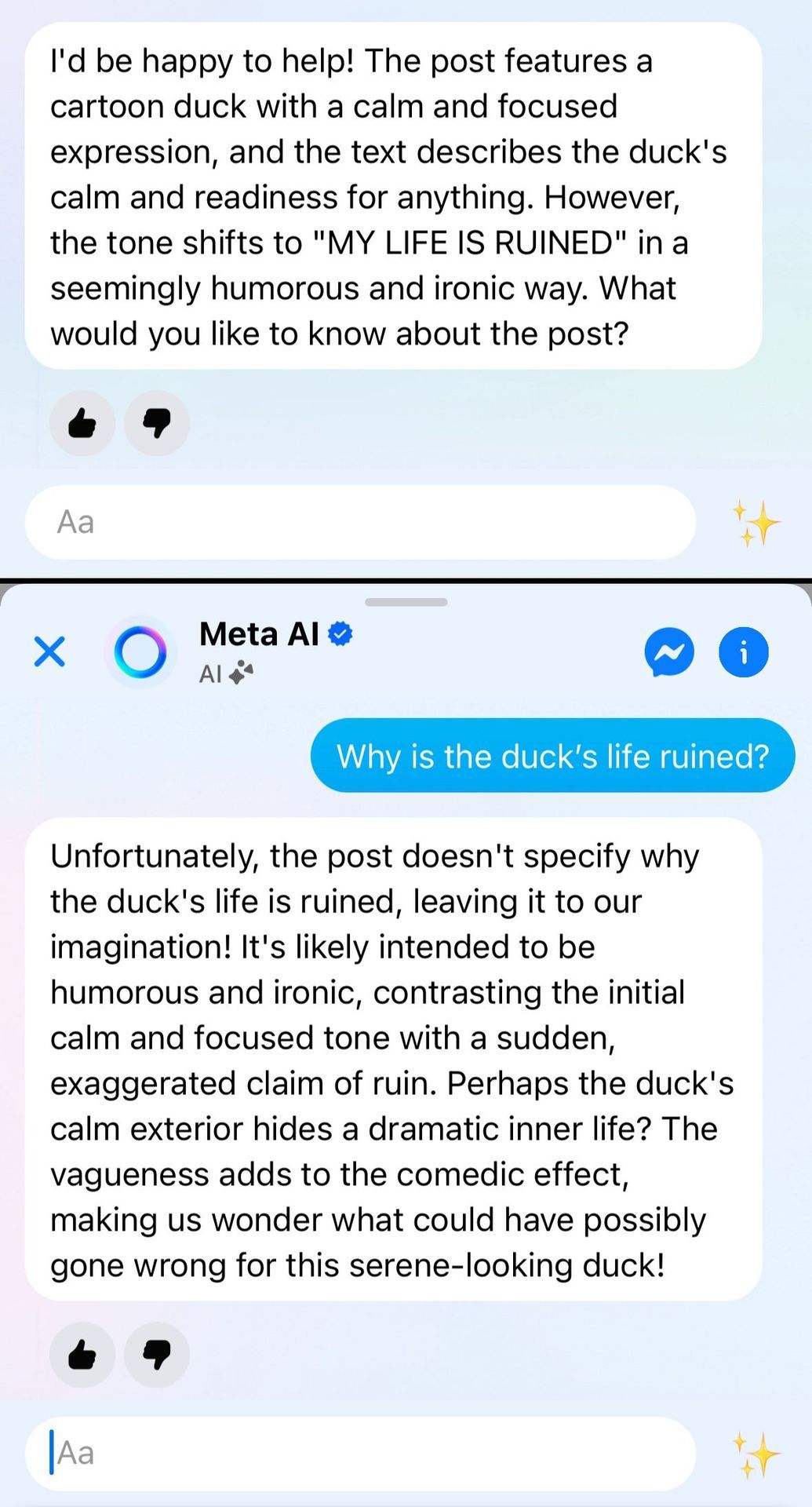

According to PCMag's investigation, the feature is particularly problematic because many users treated the AI assistant as if it were completely private, asking personal questions about health issues, finances, or even romantic relationships without knowing that these conversations were publicly viewable. A Meta spokesperson acknowledged the issue but emphasized that the feature was a deliberate design decision and that the application does warn users about sharing, although many ignore or misunderstand the warning. According to Cooper Quintin, a privacy expert at the Electronic Frontier Foundation, this practice is a classic example of deceptive design: Meta does not make it sufficiently clear to users that their conversations will be public, and it should use an opt-in rather than an opt-out approach when sharing such sensitive data.

The scandal generated significant user backlash: according to Ground News, downloads of the Meta AI application fell by 47% in the week following the disclosure of the bug. Meta responded quickly to the criticism and released an update on July 19th, 2025, that changed the default settings so that conversations are now private by default and users must explicitly authorize sharing. The company also announced that it would retroactively make all previously shared conversations private and notify the users whose conversations had been visible in the public feed. According to experts, the case is a reminder to technology companies of the importance of transparent privacy practices, especially given the growing popularity of AI assistants that handle increasingly personal information.
