Although the concept of generative models has existed for decades, the field began to accelerate in the mid-to-late 2010s, building on foundational advances in deep learning. According to Bill Gates, co-founder of Microsoft, these tools represent the most important technological advance since the advent of the graphical user interface in the 1980s (Gates 2023).

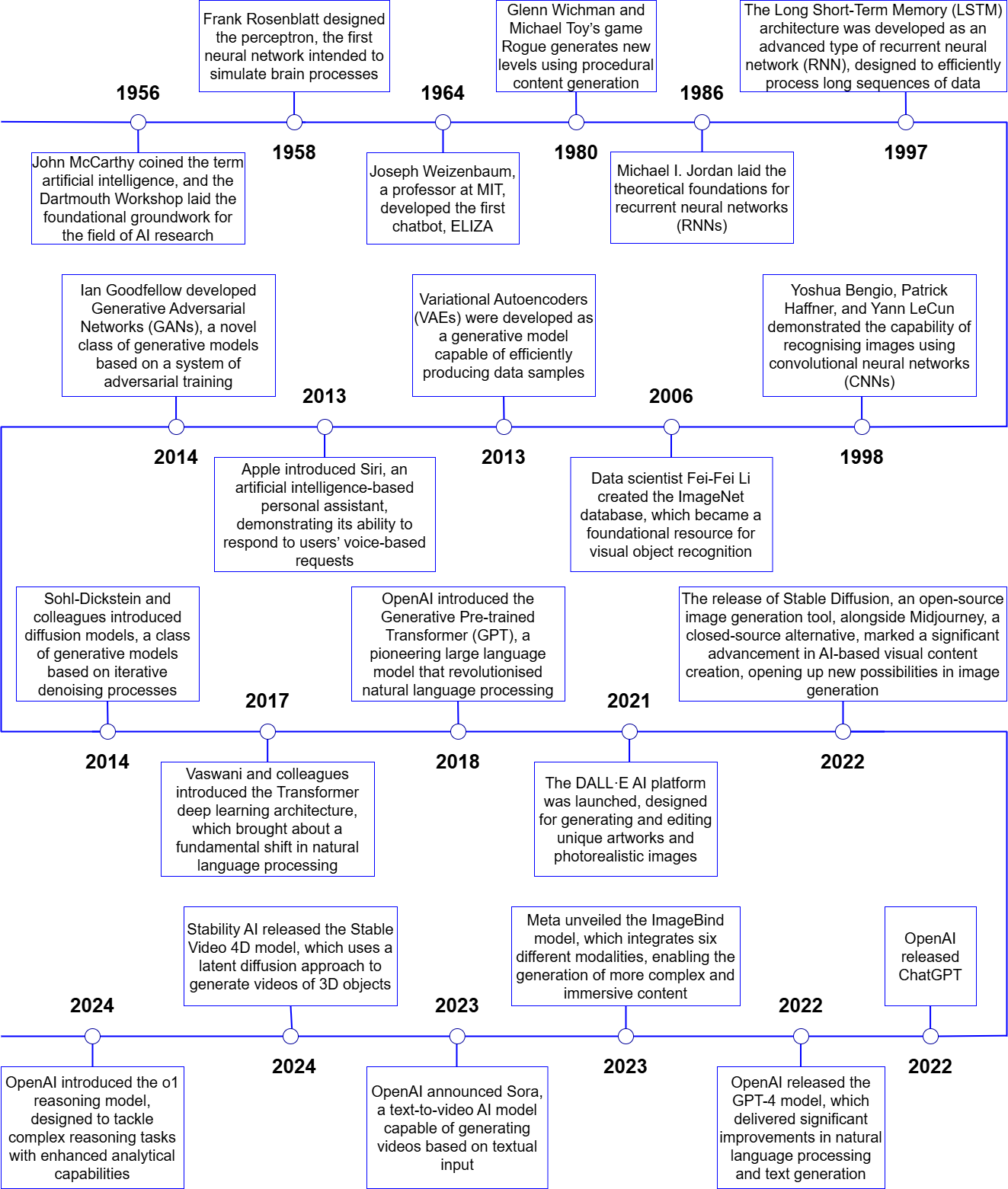

The mid-2010s saw the emergence of several foundational generative architectures. Variational Autoencoders (VAEs) were introduced in 2013 (for an overview, see Kingma & Welling 2019), followed in 2014 by Generative Adversarial Networks (GANs), which enabled models to generate new data such as photorealistic images (Goodfellow et al. 2014). The theoretical groundwork for diffusion models also appeared in this period (Sohl-Dickstein et al. 2015). Crucially for language, the Transformer architecture (Vaswani et al. 2017) provided the scalable foundation for the subsequent development of large language models. Building directly on this, OpenAI introduced the Generative Pre-trained Transformer (GPT) in 2018, followed in 2019 by the much larger GPT-2, which demonstrated a remarkable ability to produce coherent, human-like text (Radford et al. 2018; Radford et al. 2019).

From the 2020s onwards, generative AI technologies became widely accessible, ushering in a new era in the practical application of artificial intelligence. In August 2022, Stable Diffusion was released, enabling the generation of realistic and imaginative images from textual descriptions (Rombach et al. 2022). This was followed in November 2022 by the release of ChatGPT, an interactive language model that converses in real time by generating text responses to user queries and prompts (OpenAI 2022). Owing to its advanced natural language processing capabilities, the chatbot can respond to complex questions, produce creative texts, and even generate code, which revolutionised the deployment of generative AI. The key milestones in the development of generative AI are summarised in Figure 3.

The release of OpenAI’s GPT-4 model (OpenAI 2023a), along with newly introduced functionalities such as plug-ins (OpenAI 2023b) and third-party extensions (custom GPTs), further expanded the application potential of generative AI. ChatGPT rapidly became one of the fastest-growing consumer applications, reaching one million users within five days of its launch in November 2022 (Brockman 2022). As of December 2024, the platform reported 300 million weekly active users (OpenAI Newsroom 2024). The developmental trajectory shaped by generative adversarial networks, Transformer architectures, and GPT models has produced generative systems that are now applicable across virtually all domains, from the creative industries to scientific research.

Fig. 3. The Evolution of Generative AI: Key Milestones and Breakthroughs

References:

1. Brockman, Greg. 2022. ‘ChatGPT Just Crossed 1 Million Users; It’s Been 5 Days since Launch.’ X. https://x.com/gdb/status/1599683104142430208

2. Gates, Bill. 2023. ‘The Age of AI Has Begun’. GatesNotes. https://www.gatesnotes.com/The-Age-of-AI-Has-Begun

3. Goodfellow, Ian J., Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. ‘Generative Adversarial Networks’. arXiv. doi:10.48550/ARXIV.1406.2661

4. Kingma, Diederik P., and Max Welling. 2019. ‘An Introduction to Variational Autoencoders’. Foundations and Trends® in Machine Learning 12 (4): 307–392.

7. Mikolov, Tomas, Ilya Sutskever, Kai Chen, Greg Corrado, and Jeffrey Dean. 2013. ‘Distributed Representations of Words and Phrases and Their Compositionality’. arXiv. doi:10.48550/ARXIV.1310.4546

8. OpenAI. 2022. ‘Introducing ChatGPT’. https://openai.com/index/chatgpt/

9. OpenAI. 2023a. ‘GPT-4 Technical Report’. https://cdn.openai.com/papers/gpt-4.pdf

10. OpenAI. 2023b. ‘ChatGPT Plugins’. https://openai.com/index/chatgpt-plugins/

11. OpenAI Newsroom. 2024. ‘Fresh Numbers Shared by @sama Earlier Today’. X. https://x.com/OpenAINewsroom/status/1864373399218475440

12. Radford, Alec, Karthik Narasimhan, Tim Salimans, and Ilya Sutskever. 2018. ‘Improving Language Understanding by Generative Pre-Training’.

13. Radford, Alec, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, and Ilya Sutskever. 2019. ‘Language Models Are Unsupervised Multitask Learners’.

14. Rombach, Robin, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2022. ‘High-Resolution Image Synthesis with Latent Diffusion Models’. arXiv. doi:10.48550/ARXIV.2112.10752

15. Sohl-Dickstein, Jascha, Eric Weiss, Niru Maheswaranathan, and Surya Ganguli. 2015. ‘Deep Unsupervised Learning Using Nonequilibrium Thermodynamics’. In International Conference on Machine Learning, 2256–2265. PMLR.

16. Vaswani, Ashish, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, and Illia Polosukhin. 2017. ‘Attention Is All You Need’. arXiv. doi:10.48550/ARXIV.1706.03762