European privacy advocacy group NOYB sent Meta a cease-and-desist letter on May 14, 2025, after the company announced it would begin using European users' data to train its AI models on May 27. The letter, signed by Max Schrems, argues that Meta's reliance on "legitimate interest" violates the GDPR because the company processes the data without users' explicit consent and offers only an opt-out, and it warns that this could result in severe legal consequences.

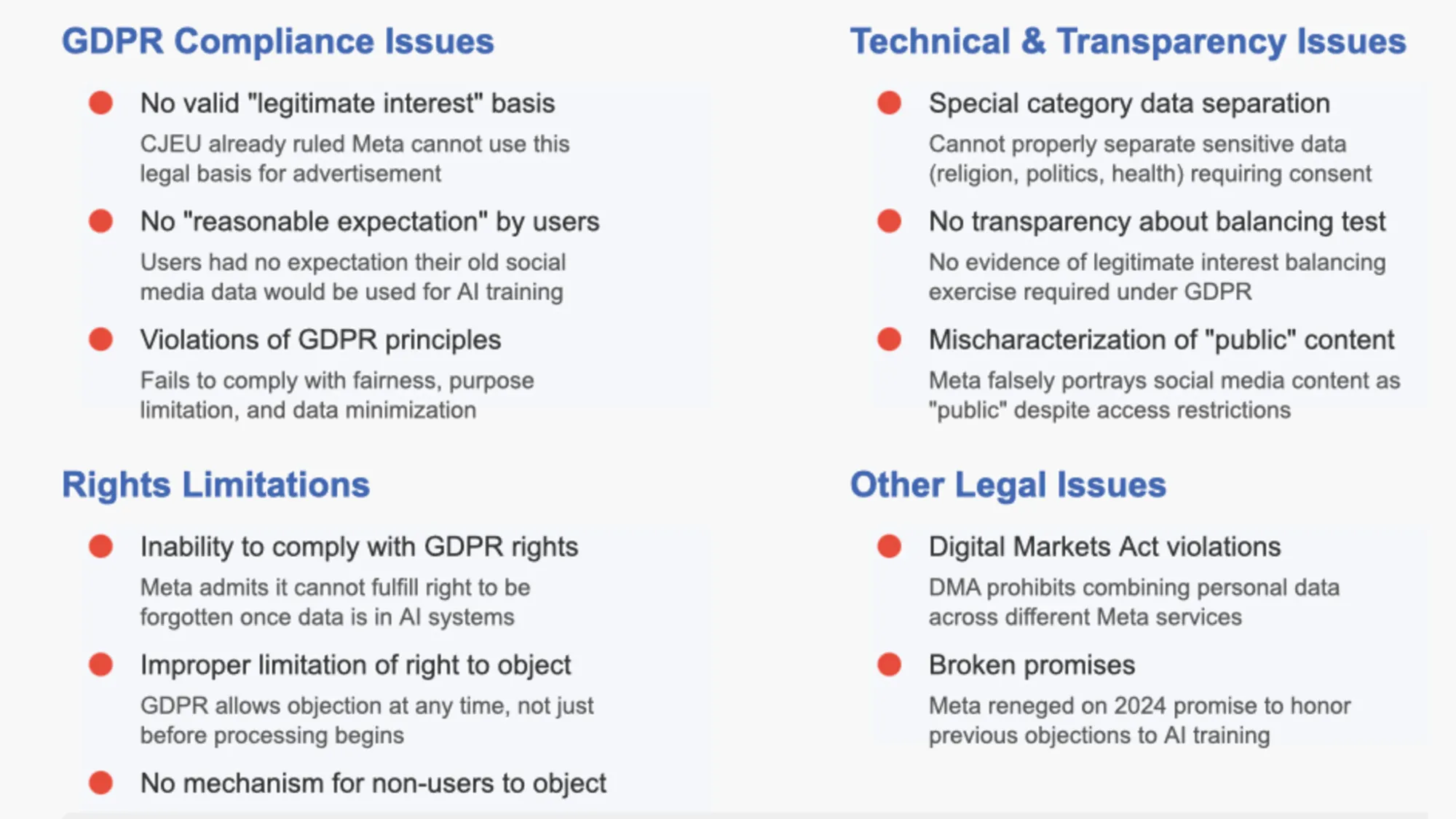

Meta informed users in April 2025 that it would train its AI models on their personal data, after postponing the plan in 2024 under pressure from the Irish Data Protection Commission. NOYB argues that under the GDPR, Meta cannot rely on "legitimate interest" to train general-purpose AI models, since the European Court of Justice has already ruled that the company cannot use a similar legal basis for targeted advertising. According to Max Schrems, Meta is simply claiming that "its interest in making money is more important than the rights of its users." He adds that consent from just 10% of Meta's 400 million European users would be enough for language learning and other training purposes, and that other AI providers such as OpenAI and France's Mistral manage without social media data.

As a Qualified Entity under the new EU Collective Redress Directive, NOYB can bring proceedings in various jurisdictions, not just in Ireland, where Meta has its European headquarters. If a court grants an injunction, Meta would have to stop the processing and also delete any AI system trained illegally. If EU data has been "mixed" with non-EU data, the entire AI model would need to be deleted. Each day that Meta continues to use European data for AI training increases the potential damage claims: even if non-material damage were only €500 per user, the total would come to around €200 billion for the approximately 400 million European Meta users. Meta rejected NOYB's arguments in a statement to Reuters, claiming they are "wrong on the facts and the law."
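The €200 billion figure follows from a simple multiplication, which can be checked with a short sketch. The per-user amount and user count are the hypothetical round figures quoted above, not a court-determined award, and the function and constant names are illustrative only.

```python
# Illustrative check of the damages estimate quoted in the article.
# Both inputs are the article's round figures, not legal findings.
AFFECTED_USERS = 400_000_000   # approximate number of European Meta users
DAMAGES_PER_USER_EUR = 500     # hypothetical non-material damage per user


def total_exposure_eur(users: int, per_user_eur: int) -> int:
    """Return the aggregate claim if every user were awarded the same amount."""
    return users * per_user_eur


total = total_exposure_eur(AFFECTED_USERS, DAMAGES_PER_USER_EUR)
print(f"Estimated exposure: €{total / 1e9:,.0f} billion")  # €200 billion
```

The estimate scales linearly, so a different per-user amount or a smaller group of claimants changes the total proportionally.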
