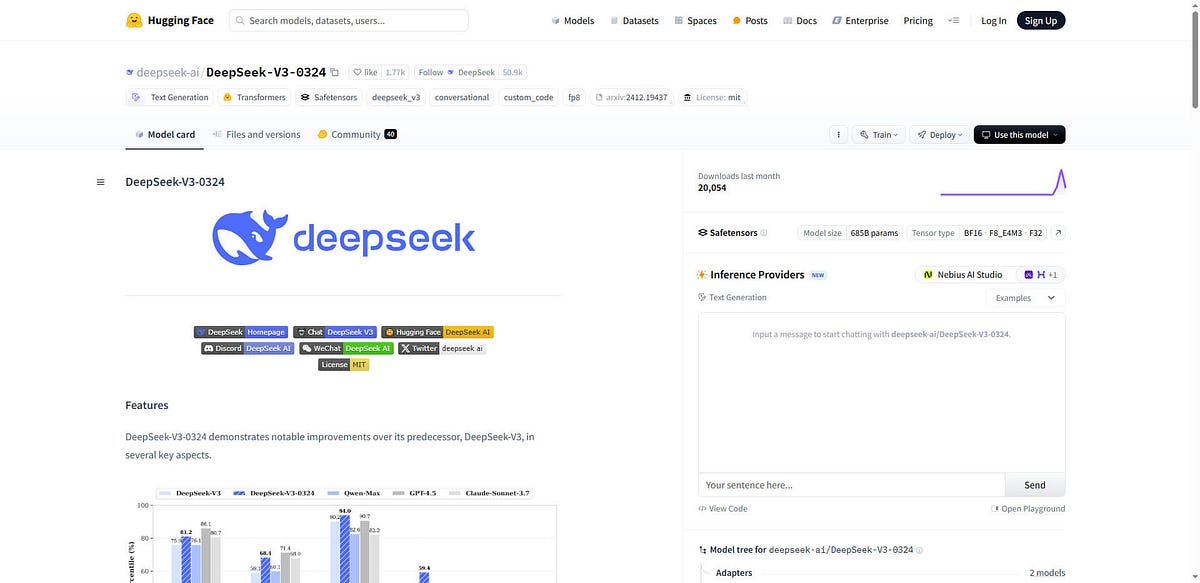

DeepSeek AI released its latest 685-billion-parameter model, DeepSeek-V3-0324, on 24 March 2025, positioning it as an open-source competitor to Anthropic's Claude 3.7 Sonnet. The new model shows significant improvements in coding, mathematics, and general problem-solving, and is freely available under the MIT licence.

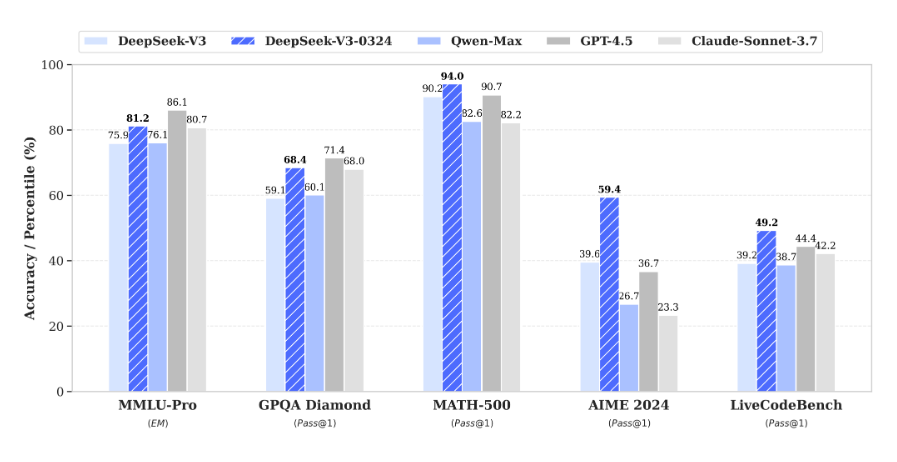

DeepSeek-V3-0324 uses a Mixture-of-Experts (MoE) architecture that activates only 37 billion of its parameters per token, which keeps the compute cost per token far below that of a dense model of the same size. Its benchmark results show substantial gains over the original DeepSeek-V3: on MMLU-Pro, which measures multi-subject language understanding, the score rose from 75.9% to 81.2% (+5.3 points); on GPQA, a set of graduate-level science questions, from 59.1% to 68.4% (+9.3 points); and on AIME, which uses problems from the American Invitational Mathematics Examination, from 39.6% to 59.4% (+19.8 points). On LiveCodeBench, which measures performance on real-world programming tasks, the score increased from 39.2% to 49.2%, a 10-point gain.
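The routing idea behind MoE can be illustrated with a toy example. The following is a minimal sketch assuming a generic top-k softmax gate over a handful of tiny stand-in experts; the names `moe_layer`, `top_k_route` and `make_expert` are hypothetical, and DeepSeek-V3-0324's real router differs in its details and operates at a vastly larger scale. What it shows is the key property: each token is scored against every expert, but only the k best-scoring experts actually run.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(scores: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalise their scores."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in top])
    return list(zip(top, weights))


def moe_layer(x: Vector, experts: List[Expert], router: List[Vector], k: int) -> Tuple[Vector, List[int]]:
    """Route one token vector x through the top-k experts and mix their outputs."""
    # Hypothetical linear router: one score per expert (dot product of x with that expert's row).
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    out = [0.0] * len(x)
    used = []
    for idx, weight in top_k_route(scores, k):
        y = experts[idx](x)  # only the selected experts are evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
        used.append(idx)
    return out, used


def make_expert(scale: float) -> Expert:
    """Stand-in expert that just scales its input (a real expert is a feed-forward network)."""
    return lambda v: [scale * vi for vi in v]


if __name__ == "__main__":
    experts = [make_expert(float(i + 1)) for i in range(8)]
    router = [[float(i), 0.0] for i in range(8)]  # expert i scores i * x[0]
    out, used = moe_layer([1.0, 2.0], experts, router, k=2)
    print("experts used:", used)  # → experts used: [7, 6]
    print("output:", out)
```

Because only the selected experts execute, compute per token scales with the active parameters rather than the total; with 37 of 685 billion parameters active, that is roughly 5.4% of the weights per token. All experts still have to be held in memory, however, so the full parameter count still determines the hardware footprint.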

DeepSeek-V3-0324 can be applied across many industries, including finance (complex analytics and risk assessment), healthcare (supporting medical research and diagnostic tools), software development (automated code generation and error analysis), and telecommunications (optimising network architectures). The model can be run with inference frameworks such as SGLang (on NVIDIA and AMD GPUs), LMDeploy, and TensorRT-LLM, and quantised GGUF versions ranging from 1.78 to 4.5 bits per weight have also been released, making local use possible on less powerful hardware.
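To put the quantised sizes in perspective, a back-of-the-envelope estimate of weight storage is parameters × bits per weight ÷ 8 bytes. The sketch below assumes a uniform bit width across all 685 billion parameters; real GGUF files differ because quantisations can keep some layers at higher precision and add metadata, and inference also needs memory for the KV cache and activations. `approx_weight_gb` is a hypothetical helper, not part of any library.

```python
def approx_weight_gb(num_params: float, bits_per_weight: float) -> float:
    """Estimate weight storage in gigabytes (1 GB = 1e9 bytes).

    Assumes every parameter uses the same bit width; real GGUF files
    mix precisions and include metadata, so treat this as a rough guide.
    """
    return num_params * bits_per_weight / 8 / 1e9


TOTAL_PARAMS = 685e9  # parameter count quoted for DeepSeek-V3-0324

if __name__ == "__main__":
    # Smallest and largest quantised widths mentioned, plus 8 bits for comparison.
    for bits in (1.78, 4.5, 8.0):
        size = approx_weight_gb(TOTAL_PARAMS, bits)
        print(f"{bits:>4} bits/weight -> ~{size:,.0f} GB")
```

Under these assumptions the 1.78-bit variant still needs roughly 152 GB for the weights alone and the 4.5-bit variant roughly 385 GB, so "less powerful hardware" here means a high-memory workstation or server rather than a typical laptop.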
